Build graph-native RAG with cognee and Amazon Neptune Analytics

This July, at the AWS Summit in New York, AWS called attention to the need of customers to move beyond experimentation to production-ready, explainable AI systems. Retrieval-Augmented Generation (RAG) remains central to that journey—but conventional pipelines often lose context across documents and create operational overhead by means of synchronizing data across separate graph and vector stores.

With Amazon Neptune Analytics, customers can store embeddings directly on graph nodes and query them with openCypher, benefitting from both semantic recall and multi-hop graph traversal in a single managed service. In this post, we show how cognee—an open‑source AI memory engine—integrates with Neptune Analytics to deliver graph-native retrieval for RAG—without juggling two backends.

Related launches to explore:

• Amazon S3 Vectors (preview) for cost-efficient, native vector storage at scale—ideal for long-term vector retention feeding RAG.

• Amazon SageMaker AI – Nova customization to tailor foundation models across the training lifecycle, then deploy to Amazon Bedrock.

Solution overview

-

Model your domain once. cognee turns raw data into a semantic graph of Pydantic DataPoint objects, and lets you choose which fields to embed for semantic search.

(Partner capability for graph construction; runs anywhere.)

-

Store embeddings on the graph. Amazon Neptune Analytics persists embeddings on nodes and provides vector algorithms callable from openCypher (for example, top-K by embedding with filters), so you can traverse relationships and retrieve semantically similar content in the same query.

-

Operate one backend, not two. A single hybrid adapter points both your graph and vector configurations at Neptune Analytics—reducing moving parts and keeping security, scaling, and monitoring under a single AWS control plane (IAM, CloudWatch, etc.).

What this gets you

- End-to-end GenAI readiness: With graph + vectors in one engine, teams can go from ingestion → indexing → RAG queries without extra ETL pipelines or middleware—shortening prototype-to-production timeframes.

- Performance & cost efficiency: Eliminates a second vector store and cross-system synchronization, reducing infrastructure cost and lowering query latency.

- Better explainability & trust: Every AI-generated answer can be traced back through the graph to its source documents and the intermediate relationships, helping with compliance and auditability.

Why Amazon Neptune Analytics

Neptune Analytics is built for investigative and analytical graph workloads and adds native vector search directly into your openCypher queries. That means you can:

- Upset and query embeddings on nodes,

- Run top-K similarity with filters in openCypher, and

- Traverse multi-hop relationships for explainable answers—all in one place.

This approach aligns with customers building explainable RAG: semantic recall for breadth, plus graph structure for precision, provenance, and multi-hop reasoning. For model choice and tuning, Amazon SageMaker AI now supports Nova customization across pre-training and post-training (including PEFT and full fine-tuning), with seamless deployment to Amazon Bedrock—so you can pair custom models with graph-native retrieval on AWS.

For durable, cost-optimized vector retention, Amazon S3 Vectors (preview) introduces vector buckets with native APIs which can store and be used to query large vector datasets—integrated with Amazon Bedrock Knowledge Bases and Amazon OpenSearch Service for tiered vector strategies.

Use Neptune Analytics for low-latency graph + vector retrieval, and S3 Vectors for long-term, low-cost vector storage that feeds RAG and agent memory.

What is cognee

cognee is an open-source AI memory engine that builds a knowledge graph over heterogeneous data (from 30+ file formats) and generates embeddings for the fields you choose—so you can retrieve by both structure (graph traversal) and semantics (similarity). It fits cleanly into AWS RAG stacks:

-

Data modeling with DataPoints. You define Pydantic models; cognee turns them into nodes (and informs edges). Fields listed in the metadata index_fields are embedded for semantic search.

-

Pipelines & tasks. Extensible, parallel pipelines for ingestion and enrichment:

- add (ingest),

- cognify (chunk → extract → summarize → write),

- codify (code analysis → graph write).

Insert custom Python tasks as needed.

-

Isolation & scoping. Use node sets (e.g., team, tenant, project) to scope search and retrieval to logical groupings.

-

Multiple retrieval modes. 10+ search types for different needs:

- SUMMARIES — concise topical overviews

- INSIGHTS — graph‑focused relationships

- CHUNKS — precise text snippets

- RAG_COMPLETION — traditional retrieval‑augmented generation

- GRAPH_COMPLETION — graph‑aware generation

- GRAPH_SUMMARY_COMPLETION — generation over graph summaries

- GRAPH_COMPLETION_COT — chain‑of‑thought over multi‑hop traversals

- GRAPH_COMPLETION_CONTEXT_EXTENSION — iterative context expansion

- CODE — code‑artifact retrieval

- CYPHER — raw Cypher queries against graph backends

- NATURAL_LANGUAGE — natural language to Cypher

- FEELING_LUCKY — exploratory retrieval

-

Domain alignment (optional). Provide RDF/OWL ontologies to map entities and properties onto domain vocabularies; the resulting ontology subgraph is merged into the knowledge graph for consistency.

Where this fits on AWS

- Pair cognee for graph construction with Amazon Neptune Analytics for graph + vector retrieval in openCypher with no separate vector store to operate or synchronize.

- Use Amazon SageMaker AI to customize models (e.g., Amazon Nova) and deploy to Amazon Bedrock for generation.

End-to-end example: cognee + Neptune Analytics

Goal: Use Neptune Analytics as a single backend for both the graph and vectors, with cognee handling ingestion and enrichment, and run retrieval via graph traversal + similarity.

Clone the cognee repo and see cognee/notebooks/neptune-analytics-example.ipynb for the full, executable version.

Stack summary

- Ingest & enrich with cognee → build graph from heterogeneous data.

- Store embeddings on nodes in Amazon Neptune Analytics.

- Query in openCypher with semantic similarity + traversal for explainable RAG.

- (Optional) Use Amazon Bedrock for generation; use Amazon S3 Vectors for low-cost long-term vector retention feeding RAG.

Prerequisites

- An Amazon Neptune Analytics instance (public connectivity or VPC access configured).

- IAM permissions on the target graph identifier:

- neptune-graph:ReadDataViaQuery

- neptune-graph:WriteDataViaQuery

- neptune-graph:DeleteDataViaQuery

- Vector dimension configured to your embedding model's output (e.g., 1536 for text-embedding-3-small).

- AWS credentials available via environment variables, AWS profile, or explicit keys.

- (Optional) Customize the Nova model and deploy to Amazon Bedrock for downstream generation.

Example environment variables:

Environment & directory setup

Configure Neptune Analytics for both graph + vectors

Why this matters (ops): One backend, one IAM model, unified monitoring—no cross-system sync.

Optional: clean slate

Ingest sample data & build the graph

Embeddings provider: Configure cognee to use Amazon Bedrock embeddings (e.g., Titan Text Embeddings V2) so vector dimensions align with your Neptune Analytics setting.

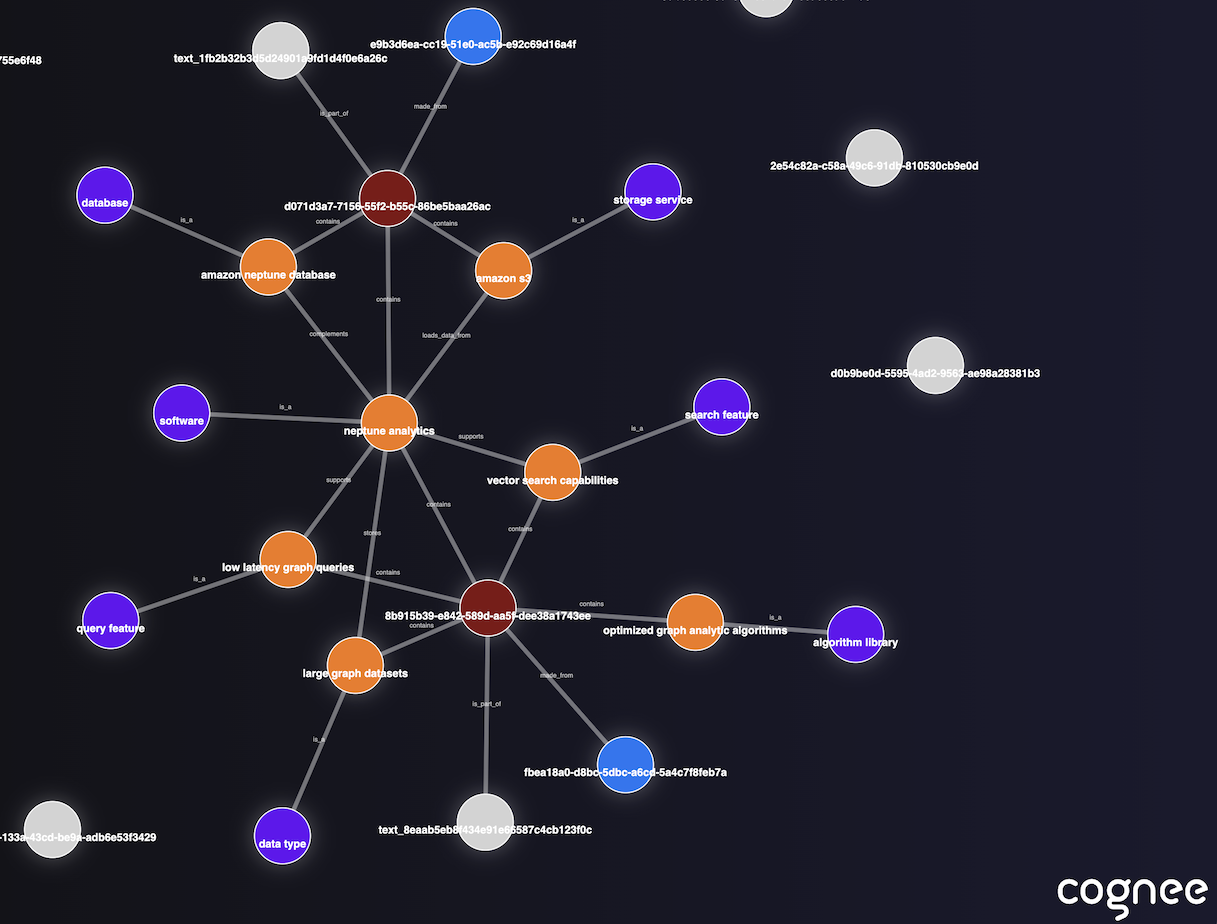

Visualize the graph

Retrieve: graph-aware and semantic

openCypher vector search (conceptual): In Neptune Analytics, you can perform top-K by embedding with filters directly in openCypher, then continue a multi-hop traversal in the same query.

Tiered vectors (optional): Keep long-lived vectors in Amazon S3 Vectors (preview) and use OpenSearch for real-time KNN on hot subsets.

Configure Amazon Bedrock embeddings in cognee (example)

The snippet below shows a minimal way to align cognee with Amazon Bedrock for embeddings, so your embedding dimension matches the Neptune Analytics vector configuration. Treat this as a template—adjust environment variables and provider names per your environment.

Notes:

- Confirm the output dimension of your chosen Bedrock embedding model (e.g., Amazon Titan Text Embeddings V2) and configure the Neptune Analytics vector dimension accordingly.

- If you plan to tier vectors, use Amazon S3 Vectors for durable, low-cost storage integrated with Bedrock Knowledge Bases, and rely on Neptune Analytics for low-latency graph + vector retrieval.

When to use this integration

Choose cognee + Amazon Neptune Analytics when you need:

- Explainable RAG. Combine semantic similarity with multi-hop reasoning to return grounded answers and show how facts connect.

- A single managed backend for graph and vectors. Reduce moving parts with one IAM model and unified monitoring.

- Investigative & analytical workflows. Fast iteration over connected data (exploration, hypothesis testing, relationship discovery).

- Performance & cost efficiency. Co-locate embeddings with relationships to lower latency and avoid running a separate vector store.

Additional resources

- The adapter implementation can be found by searching NeptuneAnalyticsAdapter in our repository

- Example notebook: Neptune Analytics end‑to‑end walkthrough

What OpenClaw is and how we give it memory with cognee

Context Graphs: Why Agent Memory Needs World Models and Behavioral Validation