Why Agent Memory Breaks (and How to Fix It)

Most teams building agents hit the same wall.

Agentic systems may seem simple on the surface, but behind the scenes each task can branch into hundreds of intermediate thoughts, tool calls, and feedback loops. That can easily produce 100x more logs per task than a classic request-response app.

The default fix is to log everything and embed it. At first, vector search over logs and documents feels magical. Then traffic grows and logs explode. Retrieval becomes slower and more expensive, context feels noisy and unpredictable, and different agents keep re-learning the same things.

So what should memory be, if not an endless junk drawer?

In this post, I'll show you how Cognee draws on neuroscience to build self-improving agent memory. What follows describes Cognee's near-term roadmap, grounded in the Bayesian Brain and Predictive Coding theories, in which memory exists to support better prediction and action.

A Solution Inspired by Neuroscience

Start by assuming a probabilistic World Model that you need to calibrate continuously.

The Bayesian Brain Theory says the brain maintains this model, which is a compressed set of beliefs about hidden causes and what is likely to happen next, and updates it when evidence arrives.

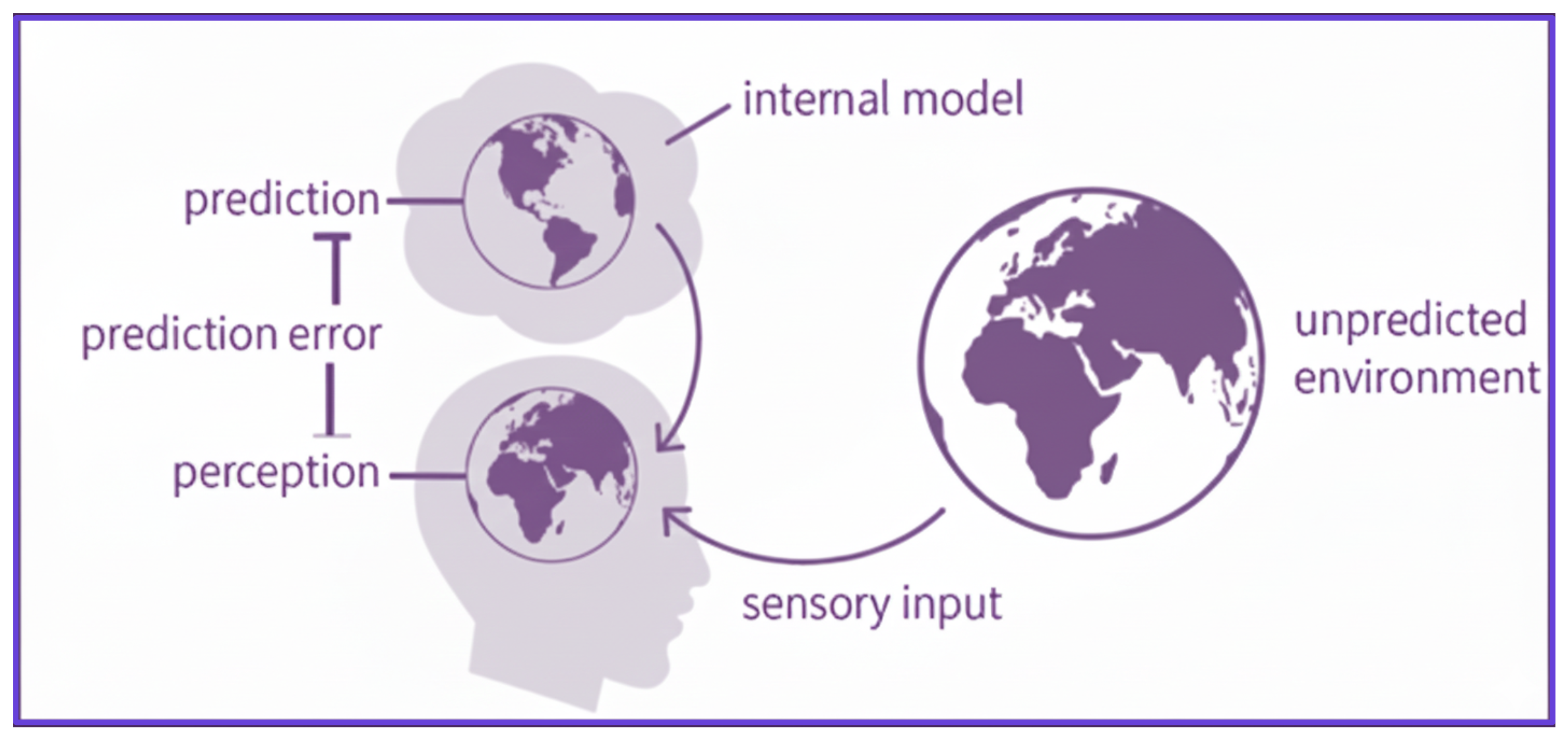

Figure 1: An internal generative model produces predictions, the world provides sensory evidence, and prediction errors update the model into a better posterior.
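The belief update in Figure 1 can be sketched with plain Bayes' rule. This is a minimal illustration rather than anything taken from Cognee: the function name `bayes_update`, the two hypotheses, and all the probabilities are assumptions chosen for the example.

```python
def bayes_update(prior, likelihood):
    """Return the posterior over hypotheses, given a prior and per-hypothesis likelihoods."""
    # Posterior is proportional to prior times likelihood.
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())
    if evidence == 0:
        raise ValueError("observation is impossible under every hypothesis")
    return {h: p / evidence for h, p in unnormalized.items()}


# Hypothetical beliefs about the hidden cause of a failed login.
prior = {"expired_password": 0.5, "locked_account": 0.5}

# Assumed probability of seeing a lockout banner under each cause.
likelihood = {"expired_password": 0.1, "locked_account": 0.9}

posterior = bayes_update(prior, likelihood)
print(posterior)
```

After one piece of evidence, most of the probability mass moves to `locked_account`. Feeding the posterior back in as the next prior gives the continuous calibration described above.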


Predictive Coding Theory then supplies the updating mechanism: the brain constantly predicts its next inputs and learns mainly from prediction error. Memory is valuable when it reduces surprise about the future under tight resource limits.

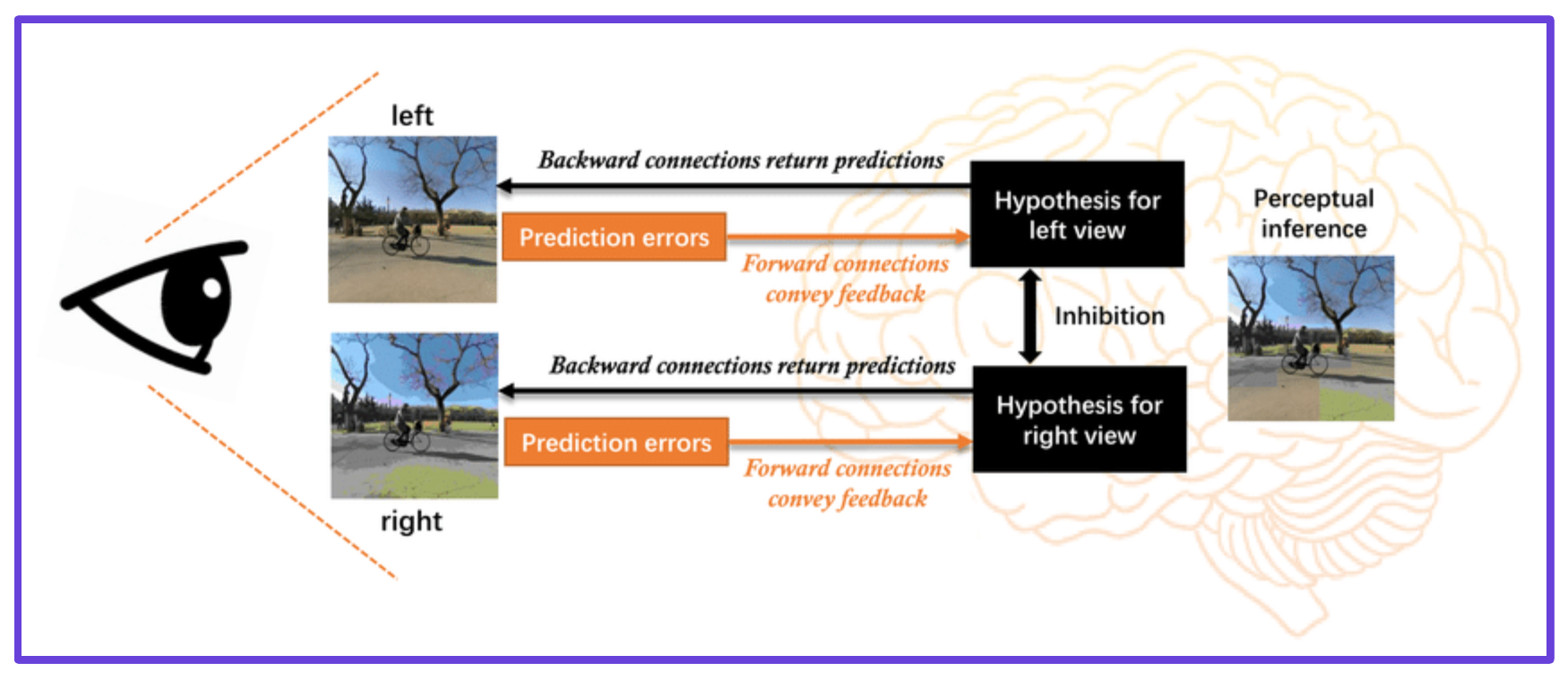

Figure 2: The competition between two brain “guesses” about what you’re seeing: one based on the left eye and one on the right.

Each guess sends a top-down prediction of what the sensory input should look like, and the actual input from each eye sends a bottom-up prediction error showing the mismatch.

The brain updates the guesses to reduce these errors, and whichever guess explains the input better suppresses the other. Your perception is simply the guess that currently minimizes prediction error best, which is why it can flip when the competing errors change.
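As a toy numerical version of that competition, the sketch below scores two guesses by prediction error, picks the one that explains the input better, and nudges it toward the input. The function names, the two-element vectors, and the learning rate are illustrative assumptions, not a model of real neural dynamics.

```python
def prediction_error(prediction, observation):
    """Mean squared mismatch between a top-down prediction and the bottom-up input."""
    return sum((p - o) ** 2 for p, o in zip(prediction, observation)) / len(observation)


def refine(prediction, observation, learning_rate=0.5):
    """Move the prediction a fraction of the way toward the observation."""
    return [p + learning_rate * (o - p) for p, o in zip(prediction, observation)]


def dominant_guess(guesses, observation):
    """Return the name of the guess with the smallest prediction error."""
    return min(guesses, key=lambda name: prediction_error(guesses[name], observation))


# Two competing guesses about the same input (hypothetical values).
guesses = {"left_eye": [1.0, 0.0], "right_eye": [0.0, 1.0]}
observation = [0.9, 0.2]

winner = dominant_guess(guesses, observation)
refined = refine(guesses[winner], observation)
print(winner, refined)
```

If the observation drifts toward the other guess, `dominant_guess` flips, which mirrors the perceptual flip described above.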

The World Model you're creating is not a full log of the past. It is an abstract guess about how situations unfold. Given your current state and action, what should you expect next? All the details that do not help your prediction are folded away.

That folding happens through traces. A trace is one concrete record of an episode. Multiple-trace theories hold that a single memory is really a family of overlapping traces, because each experience and each recall lays down another variant. Over many traces, the brain consolidates schemas: compact summaries of how things usually go.

What If Logs Became Traces?

Cognee's plan applies the above theories to agent memory. Every short trajectory becomes a session trace with four streams: text observations, environment and tool context, actions, and outcomes or rewards. At the graph level, the same episode induces a subgraph, the nodes and edges touched during the interaction. A session trace is the induced subgraph plus the multi-stream record.
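One way to picture a session trace as a data structure is shown below. This is a sketch under stated assumptions: the class names `Step` and `SessionTrace`, their fields, and the node identifiers are hypothetical and do not reflect Cognee's actual schema or API.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    observation: str           # text observation
    context: dict              # environment and tool context
    action: str                # action the actor took
    outcome: float             # outcome or reward signal
    touched_nodes: tuple = ()  # graph node ids touched during this step


@dataclass
class SessionTrace:
    session_id: str
    steps: list = field(default_factory=list)
    edges: set = field(default_factory=set)

    def add_step(self, step, edges=()):
        """Append one step and remember any graph edges it traversed."""
        self.steps.append(step)
        self.edges.update(edges)

    def induced_subgraph(self):
        """Return the touched nodes and the edges whose endpoints were both touched."""
        nodes = {n for s in self.steps for n in s.touched_nodes}
        edges = {(a, b) for a, b in self.edges if a in nodes and b in nodes}
        return nodes, edges


trace = SessionTrace("session-001")
trace.add_step(
    Step("User cannot log in", {"tool": "directory_lookup"}, "verify_identity", 0.0,
         ("user:42", "device:7")),
    edges=[("user:42", "device:7"), ("device:7", "policy:3")],
)
trace.add_step(
    Step("Identity confirmed", {"tool": "mailer"}, "send_reset_link", 1.0, ("user:42",)),
)
nodes, edges = trace.induced_subgraph()
print(nodes, edges)
```

Keeping the four streams on each step and deriving the subgraph on demand means the multi-stream record and the graph view cannot drift apart in this sketch.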

As traces accumulate, Cognee clusters similar trajectories into episodic patterns, then compresses those into semantic abstractions. The result is meta representations and meta subgraphs: meta nodes that stand for whole classes of situations rather than single events.
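To make the clustering step concrete, here is a deliberately simple sketch that groups action sequences by similarity and picks a representative for each group. It swaps in a greedy pass over `difflib.SequenceMatcher` ratios as a stand-in for whatever clustering Cognee ends up using; the threshold, the function names, and the sample trajectories are assumptions.

```python
from collections import Counter
from difflib import SequenceMatcher


def similarity(a, b):
    """Similarity in [0, 1] between two action sequences, based on matching subsequences."""
    return SequenceMatcher(None, a, b).ratio()


def cluster_trajectories(trajectories, threshold=0.7):
    """Greedy clustering: join the first cluster whose exemplar is similar enough."""
    clusters = []  # each cluster is a list of trajectories; the first one is the exemplar
    for trajectory in trajectories:
        for cluster in clusters:
            if similarity(cluster[0], trajectory) >= threshold:
                cluster.append(trajectory)
                break
        else:
            clusters.append([trajectory])
    return clusters


def summarize(cluster):
    """Use the most frequent action sequence as the cluster's compact representative."""
    return Counter(tuple(t) for t in cluster).most_common(1)[0][0]


trajectories = [
    ["verify_identity", "send_reset_link", "confirm_login"],
    ["verify_identity", "send_reset_link", "confirm_login"],
    ["verify_identity", "unlock_account", "send_reset_link", "confirm_login"],
    ["check_inventory", "order_laptop"],
]
clusters = cluster_trajectories(trajectories)
patterns = [summarize(c) for c in clusters]
print(patterns)
```

Each entry in `patterns` plays the role of an episodic pattern here. A real meta node would also carry predictive information about outcomes, which this sketch leaves out.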

Here is the criterion.

A good higher-level memory is a compact World Model that predicts future events by focusing on the features that are critical for predicting the next state, given the current state and action. Meta nodes are latent variables in that predictive model, not just human-friendly summaries.

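If you want a quick visual, think of the memory stack as three layers: session traces at the bottom, episodic patterns clustered from those traces in the middle, and semantic abstractions (meta nodes) at the top.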

Prediction, however, is not the whole story.

Why Prediction Alone Isn’t Enough

Real deployments have many actors, such as automated copilots, background daemons, and human-in-the-loop operators, that share the same environment and the same memory but have different tools and objectives. Each actor is solving its own control problem, much like a reinforcement learning agent. Shared memory must support expert-like behavior across all of them.

Therefore, Cognee's plan optimizes memory like an RL component: actors use memory while doing real work, and a learned critic evaluates memory configurations by scoring the multi-actor behavior they induce. (Parts of this are already implemented in Memify, with the full critic-guided system coming soon.) Two memories can predict equally well while only one of them makes multiple actors behave like experts.

Actor-Critic Memory Optimization

In practice, the loop is simple.

Picture a large IT helpdesk where employees chat with an AI assistant before tickets reach humans.

- The actors are the assistant and the human operators.

- The environment is your ticketing system plus documentation, HR systems, and device inventory.

- The critic is a model that scores whether the overall behavior looks healthy.

Cognee does not tell the assistant what to reply; it watches which parts of memory were used in successful resolutions and which parts correlate with loops and failures. It then nudges the memory layer to promote traces used in clean, one-touch resolutions, demote or reorganize the ones tied to repeated failures, and keep capacity focused on patterns that clearly move the needle.
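The promote-and-demote loop can be sketched as a weight update over memory items. Everything here is an assumption made for illustration: the critic's verdict is reduced to a hypothetical boolean field `resolved_first_touch`, the step size is arbitrary, and the item names are invented. A learned critic would produce a richer score.

```python
def update_weights(weights, sessions, step=0.1, floor=0.0, ceiling=1.0):
    """Promote memory items used in successful sessions and demote those tied to failures."""
    updated = dict(weights)  # leave the caller's weights untouched
    for session in sessions:
        delta = step if session["resolved_first_touch"] else -step
        for item in session["memory_used"]:
            current = updated.get(item, 0.5)  # unseen items start at a neutral weight
            updated[item] = min(ceiling, max(floor, current + delta))
    return updated


weights = {"reset_workflow": 0.5, "stale_vpn_doc": 0.5}
sessions = [
    {"memory_used": ["reset_workflow"], "resolved_first_touch": True},
    {"memory_used": ["reset_workflow"], "resolved_first_touch": True},
    {"memory_used": ["stale_vpn_doc"], "resolved_first_touch": False},
]
new_weights = update_weights(weights, sessions)
print(new_weights)
```

Only the derived weights change. The sessions and the underlying documents are read but never modified, which matches the idea that the memory layer is nudged rather than the assistant's replies.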

This reflects a parallel from neuroscience, where some systems propose actions and others provide reward and error signals.

The actor-critic structure in Cognee is a practical engineering version of that separation. The critic does not rewrite source documents or delete raw logs. It reshapes only derived memory: traces, meta traces, embeddings, and their weights. Raw ingested content stays intact, while effective memory, the part actors actually see, keeps improving.

The table below compares the naive approach with Cognee's trace-and-critic method.

| Category | Naive log and embed | Cognee trace and critic memory |
|---|---|---|
| Storage | Stores every step forever | Stores episodes as traces, then consolidates |
| Cost | Costs grow without bound | Compression keeps size stable |
| Retrieval | Retrieval gets noisy | Meta nodes surface the right structure |
| Behavior | Prediction may improve but behavior can degrade | Critic validates abstractions by behavior |

A Practical Example

Consider thousands of password reset sessions spread across logs and tickets. In a naive vector store, they are just a growing pile. In Cognee's planned system, each session becomes a trace. Those traces cluster into a few canonical workflows. Meta nodes capture the usual steps and outcomes. The critic verifies that actors relying on them remain effective. You get fewer memory pieces, faster retrieval, lower cost, and behavior that stays expert-like.

Our Roadmap to Better AI Memory

This is not theoretical. Memify already runs inside Cognee today on top of the knowledge graph produced by Cognify. It already cleans stale nodes, strengthens important links, optimizes embeddings, and improves retrieval without full reindexing. The roadmap below extends this foundation by making consolidation explicitly predictive and critic-guided. This is Cognee's near-term plan and is not yet fully implemented.

Stage one moves from blobs to timelines.

Retrieval becomes episode reconstruction. Instead of “give me related nodes,” Cognee builds a timeline of what happened, step by step, linked back to the graph regions touched. That yields rich trace clouds ready for pattern discovery and critic learning.
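Episode reconstruction can be pictured as filtering and ordering events, then collecting the graph nodes they touched. The sketch below assumes a hypothetical `Event` record with a timestamp and node ids; it is not Cognee's retrieval API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    timestamp: float
    session_id: str
    description: str
    node_ids: tuple  # graph nodes touched by this event


def reconstruct_timeline(events, session_id):
    """Return one session's events in chronological order."""
    return sorted(
        (e for e in events if e.session_id == session_id),
        key=lambda e: e.timestamp,
    )


def touched_region(timeline):
    """Collect touched node ids in first-seen order, without duplicates."""
    seen = []
    for event in timeline:
        for node_id in event.node_ids:
            if node_id not in seen:
                seen.append(node_id)
    return seen


events = [
    Event(30.0, "s1", "reset link sent", ("user:42", "mailer")),
    Event(10.0, "s1", "user reports lockout", ("user:42",)),
    Event(15.0, "s2", "laptop requested", ("inventory",)),
    Event(20.0, "s1", "identity verified", ("user:42", "directory")),
]
timeline = reconstruct_timeline(events, "s1")
region = touched_region(timeline)
print([e.description for e in timeline], region)
```

The timeline answers "what happened, step by step", while `region` links that sequence back to the part of the graph it touched.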

Stage two moves from traces to patterns.

Memify groups similar trajectories by how they unfold over time and proposes higher-level abstractions for each cluster. The critic acts as a safety net. After traces are compressed under a candidate abstraction, do actors still behave like experts, and do task metrics stay stable or improve? If yes, the abstraction survives. If not, it is refined or rolled back.
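The accept-or-roll-back decision can be sketched as a comparison of metrics before and after compression. The metric names, the tolerance, and the convention that higher values are better are all assumptions for this example. In the planned system the critic would be a learned model rather than a fixed threshold.

```python
def review_abstraction(baseline, candidate, tolerance=0.01):
    """Accept a candidate abstraction unless a metric drops by more than the tolerance.

    Both arguments map metric names to scores where higher is better.
    A metric missing from the candidate counts as a regression.
    """
    regressed = [
        name
        for name, before in baseline.items()
        if candidate.get(name, float("-inf")) < before - tolerance
    ]
    if regressed:
        return "rollback", regressed
    return "accept", []


# Hypothetical metrics measured before compressing traces.
baseline = {"first_touch_resolution": 0.82, "critic_score": 0.75}

# Hypothetical metrics measured with two different candidate abstractions in place.
stable = {"first_touch_resolution": 0.83, "critic_score": 0.745}
degraded = {"first_touch_resolution": 0.70, "critic_score": 0.76}

print(review_abstraction(baseline, stable))
print(review_abstraction(baseline, degraded))
```

Returning the list of regressed metrics alongside the verdict gives a refinement step something concrete to work on before a rollback becomes final.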

Stage three reshapes long-term memory.

Accepted meta nodes become durable graph structures. Regions well explained by these abstractions are slimmed down through archiving, compression, or sampling. Redundant structures that behave identically under both prediction and the critic are merged into more compact forms.

The Payoff: Faster, Smarter Agents

Deploying Cognee gives you shared memory that improves the longer it runs. It compresses aggressively but only where it is behaviorally safe. It enables expertise transfer across workflows because abstractions learned in one place are validated before reuse elsewhere.

Because Cognee sits at the AI memory layer, you can apply it immediately to existing logs and legacy data, then specialize the critic with domain rewards for safety, compliance, satisfaction, or cost.

If your agents feel slow, noisy, or forgetful, that is rarely a prompt problem. It is a memory architecture problem.

Cognee's project addresses this with a brain-inspired predictive objective and critic-guided consolidation, so your system keeps getting smarter by standing on continually optimized memory. While parts of this vision are already working in Memify, the full trace-based, critic-guided system described here is Cognee's near-term plan.
