Connecting Models to Memory: Introducing cognee MCP for Universal AI Access

AI is only as useful as its ability to connect to the tools and data that power real-world workflows. That’s why we’ve brought Model Context Protocol (MCP) to cognee—an open, principled standard that lets models securely discover, query, and extend external knowledge, providing a unified, auditable way to interact with resources beyond a single session.

In practice, MCP provides a protocol layer for secure, typed tool calling. Now available in our open-source core, cognee MCP turns AI memory from a transient buffer into a durable semantic layer that agents can access directly.

MCP: The Open Standard for AI Tool Integration

MCP (Model Context Protocol) enables AI models—like those from OpenAI or Anthropic—to interact with external tools, data, and services in a structured, secure way. It defines how models can discover available resources, execute functions, and handle results consistently.
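
To make the discover, execute, and handle-results cycle concrete, here is a minimal sketch of the JSON-RPC 2.0 messages an MCP client exchanges with a server. The `tools/list` and `tools/call` method names come from the MCP specification. The tool name `search`, its argument name, and the sample response are illustrative assumptions, not output captured from a real server.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids must be unique within a session


def list_tools_request() -> dict:
    """Discovery: ask the server which tools it exposes."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": "tools/list"}


def call_tool_request(name: str, arguments: dict) -> dict:
    """Execution: invoke one tool by name with structured arguments."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def extract_text(response: dict) -> str:
    """Result handling: join the text parts of a tool result, or raise on error."""
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "unknown error"))
    result = response["result"]
    text = "\n".join(
        part["text"]
        for part in result.get("content", [])
        if part.get("type") == "text"
    )
    if result.get("isError"):
        raise RuntimeError(text or "tool reported an error")
    return text


if __name__ == "__main__":
    # Argument name `search_query` is an assumption for illustration.
    request = call_tool_request("search", {"search_query": "MCP authentication flow"})
    print(json.dumps(request, indent=2))

    # Hand-written sample response, for illustration only.
    sample = {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": "Auth notes from last week"}]},
    }
    print(extract_text(sample))
```

In practice an MCP client library builds and sends these messages for you; the point of the sketch is that every tool, whatever it does, is reached through the same two request shapes.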

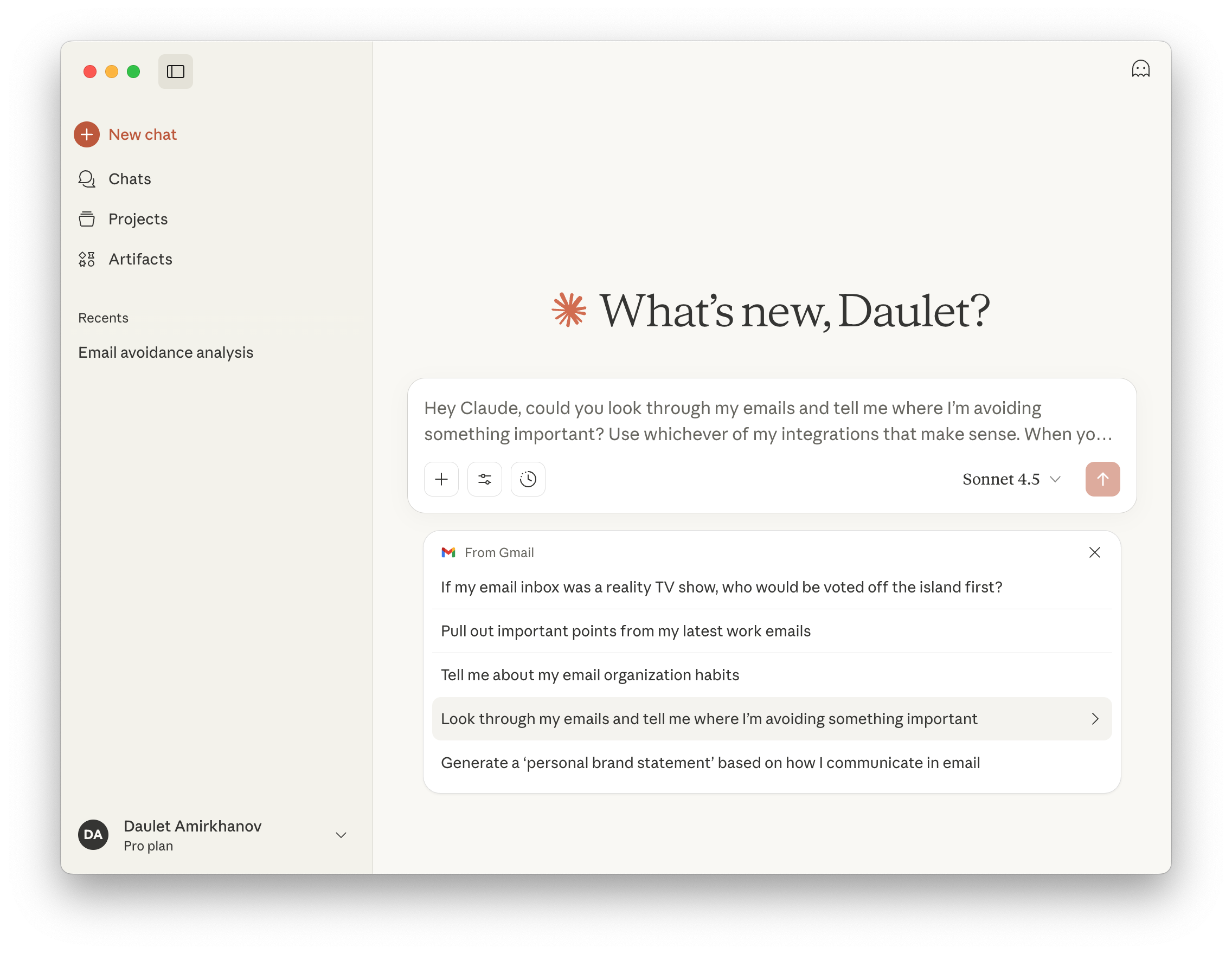

In a way, it is the REST equivalent for AI: a protocol that ensures interoperability without custom hacks. Anthropic introduced MCP in late 2024, and Claude was among the first assistants to use it to query emails, tasks, or calendars with proper permissions.

With MCP, integrations become much simpler: grant access to Gmail for context-aware searches, analyze Asana tasks for prioritization, or summarize Google Calendar for planning. The same pattern extends to any service that exposes an MCP server, which makes for a more connected AI ecosystem.

cognee MCP builds on this by linking our AI memory engine to frameworks like LangGraph and to MCP clients from OpenAI and Anthropic. It acts as a protocol bridge: agents query cognee's graphs through structured calls that surface entities, relationships, and insights from the semantic data layer.

Use Cases for cognee MCP

We’ve added MCP support to extend cognee beyond its UI and turn it into a protocol layer that your agents and tools can call directly inside real workflows.

By enabling communication without a web UI or SDK, cognee MCP unlocks powerful integrations for agent systems, with persistent memory supporting multi-step reasoning and decision-making.

Here are a few concrete applications of this implementation:

1. Integrating with AI assistants (Claude, Cursor, etc.)

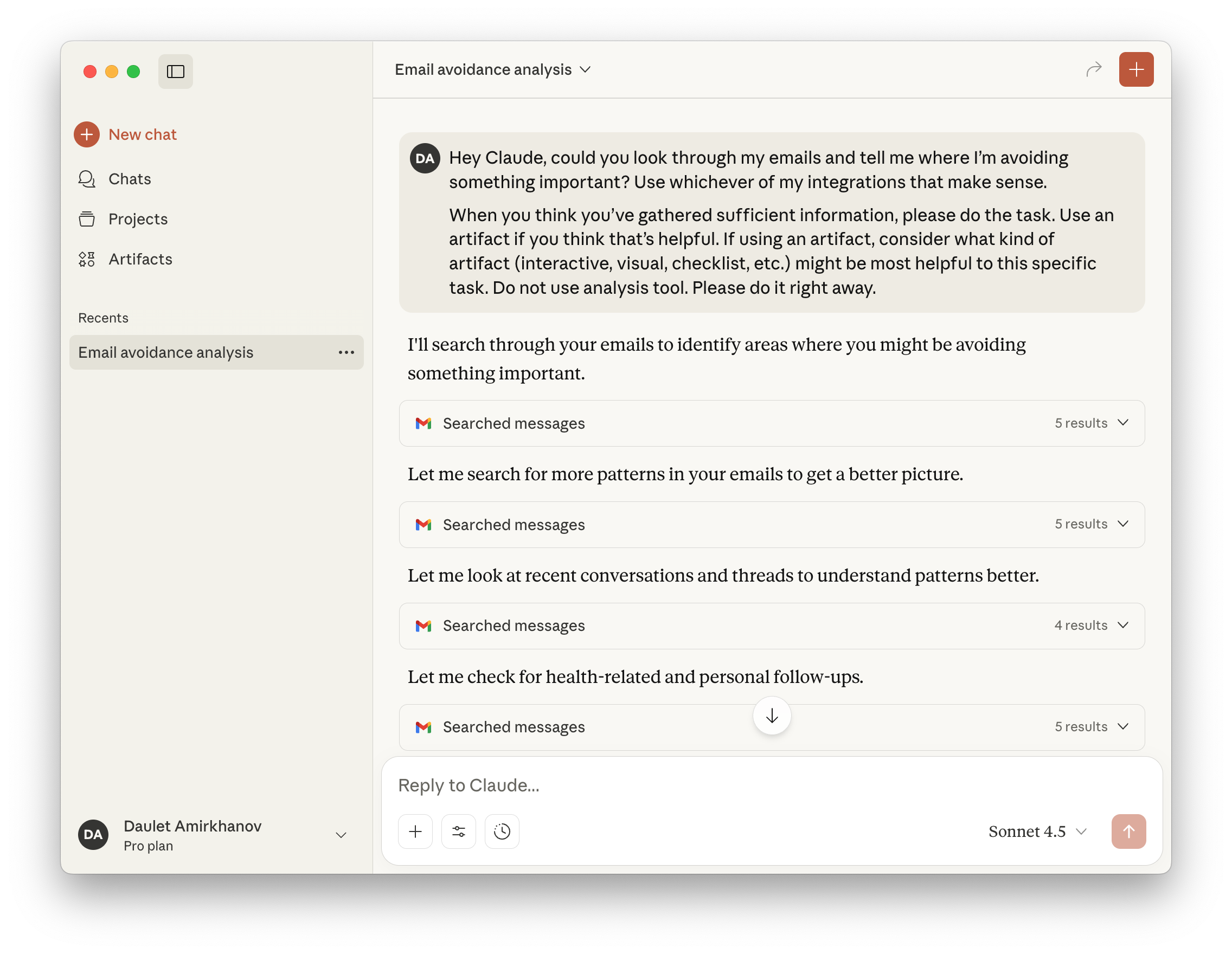

Connect cognee MCP to AI assistants like Claude Desktop or Cursor so that they can:

- Search across your entire cognee knowledge base.

- Summarize documents stored in your cognee memory.

- Retrieve and cite nodes or relationships from your graph.

Example:

You’re debugging a project, and ask Claude:

“What did I write about the MCP authentication flow last week?”

Claude queries your cognee MCP server, finds the note, and summarizes it inline—no copy-pasting or local indexing needed.

In software development, this enhances context engineering by layering semantic analysis over code repositories.

2. Local Graph Memory for LangGraph or Custom Agents

You can connect cognee MCP as a memory backend for any agent framework that supports the Model Context Protocol.

This lets your agent:

- Ingest data (cognee.add)

- Retrieve context (cognee.search)

- Use graph traversal results

All without manually wiring SDKs or database adapters.
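
The ingest-and-retrieve loop above can be sketched as a thin wrapper that an agent holds. This is a hypothetical helper, not part of cognee: `AgentMemory`, the injected `call_tool` function, and the argument names `data`, `search_query`, and `search_type` are assumptions, so check the tool schemas your server reports before relying on them. The tool names `cognify` and `search` are the ones described in the feature list in this post.

```python
from typing import Callable

# A tool caller takes a tool name plus arguments and returns the result text.
# In a real agent it would wrap an MCP client session; here it is injected.
ToolCaller = Callable[[str, dict], str]


class AgentMemory:
    """Hypothetical wrapper giving an agent remember/recall over MCP tools."""

    def __init__(self, call_tool: ToolCaller) -> None:
        self._call_tool = call_tool

    def remember(self, text: str) -> str:
        # Ingest: hand raw text to the server so it becomes graph knowledge.
        return self._call_tool("cognify", {"data": text})

    def recall(self, query: str, search_type: str = "GRAPH_COMPLETION") -> str:
        # Retrieve: query the graph; the mode name is passed through unchanged.
        return self._call_tool(
            "search", {"search_query": query, "search_type": search_type}
        )


def fake_transport() -> tuple[ToolCaller, list]:
    """In-memory stand-in for an MCP server, used only to exercise the wrapper."""
    notes: list[str] = []
    log: list[tuple[str, dict]] = []

    def call_tool(name: str, arguments: dict) -> str:
        log.append((name, arguments))
        if name == "cognify":
            notes.append(arguments["data"])
            return "ok"
        if name == "search":
            # Naive keyword match; a real server would search the graph.
            words = arguments["search_query"].lower().split()
            hits = [n for n in notes if any(w in n.lower() for w in words)]
            return "\n".join(hits)
        raise ValueError(f"unknown tool: {name}")

    return call_tool, log


if __name__ == "__main__":
    call_tool, _ = fake_transport()
    memory = AgentMemory(call_tool)
    memory.remember("Project Atlas uses token-based auth.")
    print(memory.recall("auth"))
```

Injecting the tool caller keeps the agent code independent of the transport, so the same wrapper works whether the server runs locally over stdio or behind a network endpoint.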

Example:

An AI assistant maintains a persistent memory across sessions using cognee MCP.

When you restart it, it still remembers your research notes, project graphs, and prior interactions.

We’ve actually built a hands-on integration with LangGraph that demonstrates this exact use case in depth. In that example, our agent interacted with cognee tools directly rather than through MCP, but the concept is identical: persistent, graph-based memory that AI can query and build upon over time.

3. Centralized Knowledge Access for Multiple LLMs

cognee MCP allows multiple AI agents (Claude, GPT-4, local Llama) to talk to the same cognee instance through a shared protocol.

So, instead of maintaining separate embeddings or context stores for each model, you unify them behind a single cognee memory endpoint.

Example:

Your GPT-4 workspace uses cognee MCP to search business documents, while your Claude agent uses the same memory to summarize daily progress. Both access the same source of truth.

This streamlines agent systems in enterprise settings, where semantic data layer analysis demands consistent, time-aware retrieval.

In essence, cognee MCP makes cognee a universal interface, bridging graph retrieval and agent reasoning for leaner, more intelligent applications.

Core Features: What cognee MCP Offers

cognee MCP exposes a collection of standardized tools that enable AI agents to read from, write to, and reason over cognee’s knowledge graph through the Model Context Protocol.

Knowledge Ingestion

- cognify - Processes raw text or documents into a structured knowledge graph by extracting entities, relationships, and semantic connections.

- save_interaction - Captures user-agent interactions, converts them into graph knowledge, and generates new coding rules from the conversation context.

These tools tie into every stage of cognee’s ECL (Extract, Cognify, Load) pipeline, which keeps the semantic layer scalable.

Querying and Retrieval

- search - Performs queries across the knowledge graph with multiple specialized modes:

  - GRAPH_COMPLETION - LLM reasoning with graph context

  - RAG_COMPLETION - Chunk-based document retrieval

  - CHUNKS - Semantic text matching

  - CODE - Code-aware search and analysis

  - SUMMARIES - Access pre-generated hierarchical summaries

  - CYPHER - Execute direct Cypher queries

  - FEELING_LUCKY - Automatically selects the most suitable search type

This supports agent systems with precise, context-engineered responses.
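
Because the mode is passed as a plain string, a typo only surfaces once the server rejects the call. A small client-side check can catch it earlier. The sketch below enumerates the seven modes listed above and normalizes input before building the arguments for a `search` call. The helper names and the argument keys `search_query` and `search_type` are assumptions for illustration.

```python
from enum import Enum


class SearchType(Enum):
    """The search modes named in this post."""

    GRAPH_COMPLETION = "GRAPH_COMPLETION"
    RAG_COMPLETION = "RAG_COMPLETION"
    CHUNKS = "CHUNKS"
    CODE = "CODE"
    SUMMARIES = "SUMMARIES"
    CYPHER = "CYPHER"
    FEELING_LUCKY = "FEELING_LUCKY"


def parse_search_type(raw: str) -> SearchType:
    """Normalize user or model input to a known mode, failing fast on typos."""
    key = raw.strip().upper().replace("-", "_").replace(" ", "_")
    try:
        return SearchType[key]
    except KeyError:
        valid = ", ".join(t.name for t in SearchType)
        raise ValueError(
            f"unknown search type {raw!r}; expected one of: {valid}"
        ) from None


def search_arguments(query: str, mode: str) -> dict:
    """Build the arguments payload for a `search` tool call (keys are assumed)."""
    if not query.strip():
        raise ValueError("query must not be empty")
    return {"search_query": query, "search_type": parse_search_type(mode).value}


if __name__ == "__main__":
    print(search_arguments("How does the auth flow work?", "graph completion"))
```

Requiring an explicit mode, rather than defaulting silently, makes it obvious in agent logs which retrieval strategy produced a given answer.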

Data and Rule Management

- list_data - Lists all datasets and data items stored in cognee memory, including their IDs.

- delete - Removes specific data items from the graph (supports both soft and hard deletion).

- prune - Performs a full memory reset, clearing all data and metadata.

These tools make cognee MCP a robust extension for AI memory, fostering modular, extensible systems.

Ready to Dive In? Getting Started with cognee MCP

Setup is simple, whether you use the standalone configuration or the built-in mode.

Standalone Configuration

To connect cognee MCP directly to an MCP-compatible client, add the following entry to your client configuration (for instance, .claude/config.json):

This tells the client to launch the cognee MCP server automatically using uv run, exposing it as a local Model Context Protocol endpoint.
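
As a rough sketch of what such an entry can look like: many MCP clients, Claude Desktop among them, read a JSON file with a top-level `mcpServers` map whose entries give a `command` and its `args`. The snippet below generates an entry of that shape. The server key `cognee`, the directory placeholder, and the entry-point name are assumptions for illustration only, so take the exact values from the cognee MCP documentation.

```python
import json


def mcp_server_entry(server_dir: str, entry_point: str) -> dict:
    """Build a client config fragment that launches a server via `uv run`."""
    return {
        "mcpServers": {
            "cognee": {  # label chosen for this sketch
                "command": "uv",
                # `--directory` makes uv run from the server's project folder.
                "args": ["--directory", server_dir, "run", entry_point],
            }
        }
    }


if __name__ == "__main__":
    # Both values are placeholders, not verified paths or script names.
    entry = mcp_server_entry("/path/to/cognee-mcp", "cognee-mcp")
    print(json.dumps(entry, indent=2))
```

Generating the fragment from code is optional; pasting the equivalent JSON into the client configuration by hand works the same way.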

Built-in Mode

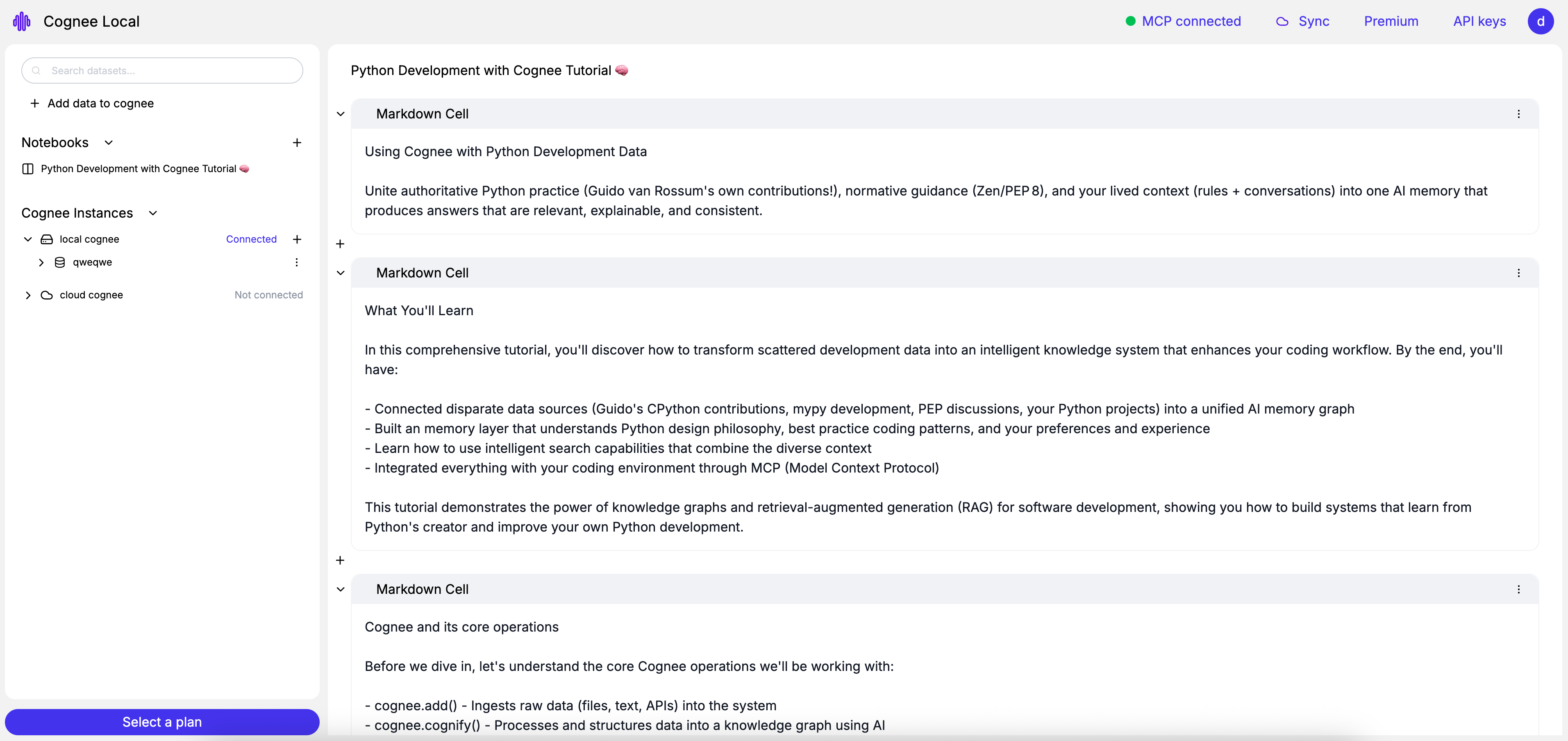

Starting with cognee v0.3.5, MCP support is included out of the box.

Simply run:

This command starts the full cognee stack (frontend and backend) along with a dedicated MCP server. You can work with your graph memory through the browser interface while any AI assistant that supports the Model Context Protocol connects through the MCP server.

How do you know whether the MCP server is up and running? Since v0.3.5, the cognee UI displays MCP status directly in the top header. If you see “MCP connected,” your server is active and ready to accept requests from connected agents.

Ready for Persistent, Graph-based Agent Memory?

MCP turns cognee into a standard, typed gateway between agents and durable memory—elevating it from a standalone app to an interface layer your agents can build on. Once enabled, your memory graph isn’t tied to a single environment; it becomes part of your AI’s active working context. The result? Cleaner integrations, auditable access, and cross-model interoperability—without custom glue code.

Here’s a quick recap of how to enable the implementation:

1. Update to version 0.3.5 or later to get cognee MCP included automatically.

2. Start your local instance by running:

3. Watch for the “MCP connected” indicator in the UI header to confirm your MCP server is active.

4. Connect your AI assistant (Claude, Cursor, LangGraph, etc.). It can now query and extend your cognee memory directly through MCP.

Bridging models and tools with MCP doesn’t just enhance workflows—it unlocks new possibilities for context-aware applications across development, management, and beyond. If you’re exploring multi-agent workflows or moving prototypes toward production, this is the fastest path to make memory a first-class, shareable capability across your stack.
