Vector Databases Explained: A Smarter Way to Search by Meaning

In today’s AI-driven world, vector databases are becoming essential tools for working with unstructured data. But what exactly are they—and why are they so important?

At a high level, vector databases allow us to search data based on conceptual similarity rather than exact keyword matches. Instead of looking for literal terms, they let us find content that means the same thing—making them incredibly useful for semantic search, recommendation engines, and other AI-powered applications.

They build on the foundation of vector indexes (like FAISS), but go much further—adding all the features you’d expect from a full-fledged database: data storage, updates, filtering, security, scalability, and more.

As companies increasingly embed text, images, and other content into high-dimensional vector form, the need for systems that can store and query billions of these embeddings efficiently has exploded. Vector databases are designed specifically to solve that problem.

By the end of this post, you’ll have a clear understanding of what vector databases are, how they work, and when you might want to use one in your own projects.

What is a Vector Database?

Also referred to as a vector store, vector-based database, or vector search database, a vector database is a specialized system built to store and retrieve data represented as high-dimensional vectors. These vectors (often called embeddings) are numerical representations of complex data like text, images, or audio. They are generated by a machine learning model that transforms raw input into points in a latent vector space, where content similar by context lives close together.

When a user submits a query (e.g., a block of text or an image), the system compares the query’s vector to those already stored and returns results ranked not by exact match (like an SQL equality check) but by conceptual similarity. This enables powerful semantic search experiences: for example, you can take a photograph with your phone and find similar images, even if nothing in the filenames or document properties matches.
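To make "ranked by similarity" concrete, here is a minimal sketch in plain Python. It assumes cosine similarity as the measure (one common choice among several), and the three-dimensional vectors and file names are made up for illustration; real embeddings typically have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, invented for this example.
stored = {
    "beach_sunset.jpg": [0.9, 0.1, 0.0],
    "beach_noon.jpg": [0.8, 0.3, 0.1],
    "city_night.jpg": [0.0, 0.2, 0.9],
}
query = [1.0, 0.2, 0.0]

# Rank every stored item by how similar its vector is to the query vector.
ranked = sorted(
    stored,
    key=lambda name: cosine_similarity(query, stored[name]),
    reverse=True,
)
print(ranked)
```

Both beach images rank ahead of the cityscape because their vectors point in nearly the same direction as the query, regardless of what the files are called.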

This kind of comparison is extremely powerful but also computationally intensive, especially at scale. Traditional relational databases or keyword search engines aren’t designed for this type of search. Vector databases, however, are. They use specialized indexing methods—such as k-nearest neighbor (k-NN) indexes and algorithms like HNSW (Hierarchical Navigable Small-World graphs) or IVF (Inverted File Index)—to make similarity search fast and efficient, even across millions of vectors.

Vector databases don’t just store the bare vectors, though; they usually also index each item’s metadata (IDs, labels, timestamps, tags, etc.). This enables hybrid search, which combines semantic similarity with traditional filtering; for example, it allows searching for documents similar to a query but only returning results written in English or published after a certain date. We’ll see later how these two indexes work together in the query process.

How Do Vector Databases Work?

Before diving deeper, though, let’s outline the typical workflow in a vector database. We’ll use a running example to make it concrete: imagine we're building a photo search application. We want users to upload a photo and instantly be able to find similar images—even if there are no matching keywords or tags.

To make this happen, a vector database follows two main workflows:

1. Ingesting Data: Turning Content into Vectors

Step 1: Creating Embeddings

First, we start with an embedding model—usually a machine learning model like a neural network. Its job is to turn our content (photos, in this example) into numerical vectors. For images, we might use a pre-trained convolutional neural network (CNN). When we feed a photo into this model, it outputs a vector like [0.02, -1.15, …, 0.47], capturing key visual features of the image.

Items with similar content (like pictures of beaches) end up closer together in this vector space, while completely different images (like cityscapes or forests) occupy different areas of the space. Any metadata attached to an image (say, an ID, the image file path, the date and/or location of the shot, and maybe even the photographer’s name) is kept alongside its vector rather than discarded.

Step 2: Storing Vectors & Metadata

Once we've got these vectors, we store them in our database alongside the files’ related metadata (where available). Contextually similar items end up near each other in the high-dimensional embedding space (also called latent space) in which they are stored.
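As a rough sketch of what "storing vectors alongside metadata" means, the toy class below keeps both under one ID. The names `Record`, `InMemoryVectorStore`, and `upsert` are hypothetical and chosen for this example; a real vector database adds persistence, indexing, and concurrency control on top of this idea.

```python
from dataclasses import dataclass, field

@dataclass
class Record:
    vector: list[float]
    metadata: dict = field(default_factory=dict)

class InMemoryVectorStore:
    """Toy store: maps an ID to its vector and metadata. No index, no persistence."""

    def __init__(self, dim):
        self.dim = dim
        self.records = {}

    def upsert(self, item_id, vector, metadata=None):
        # Every vector must live in the same space, so enforce one dimensionality.
        if len(vector) != self.dim:
            raise ValueError(f"expected {self.dim} dimensions, got {len(vector)}")
        self.records[item_id] = Record(list(vector), dict(metadata or {}))

    def get(self, item_id):
        return self.records[item_id]

store = InMemoryVectorStore(dim=3)
store.upsert("img-001", [0.9, 0.1, 0.0],
             {"path": "photos/beach_sunset.jpg", "location": "beach"})
store.upsert("img-002", [0.0, 0.2, 0.9],
             {"path": "photos/city_night.jpg", "location": "city"})
```

Keeping the metadata next to the vector is what later lets a query combine "similar to this photo" with "taken at the beach".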

The combination of the embeddings and metadata lets us perform powerful searches later on—for example, finding photos similar to a user's query while also filtering by specific criteria (like location or date). However, efficient querying also requires building indexes.

Step 3: Indexing Vectors & Metadata

Vector querying can get slow if the task involves comparing millions of items. To fix that, vector databases build special indexes. These indexes organize vectors so the system can quickly find the closest neighbors without checking every single vector each time.

Some popular indexing methods include:

- Random Projections: A dimensionality reduction technique that projects high-dimensional vectors into a lower-dimensional space using random matrices.

  ✅ Good for reducing computational cost while approximately preserving distance relationships.

- Product Quantization (PQ): Splits vectors into smaller sub-vectors and quantizes each independently, enabling compact storage and fast approximate distance calculations.

  ✅ Great for large-scale similarity search with limited memory usage.

- Locality-Sensitive Hashing (LSH): Hashes similar vectors into the same “buckets” with high probability, enabling fast lookups by comparing only within matching buckets.

  ✅ Useful for high-speed approximate search in very high-dimensional spaces.

- HNSW (Hierarchical Navigable Small-World) Graphs: Builds a multi-layer graph where vectors are connected to their nearest neighbors, enabling efficient navigation and approximate search.

  ✅ Excellent for real-time, high-accuracy nearest neighbor search at scale.

- IVF (Inverted File Index): Divides the vector space into clusters and only searches within the most relevant clusters at query time.

  ✅ Ideal for speeding up searches in massive vector datasets with moderate accuracy trade-offs.
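To make one of these methods concrete, here is a small sketch of locality-sensitive hashing using random hyperplanes, which is one well-known LSH family (suited to angular similarity) rather than the only one. The function names and the tiny dataset are assumptions made for this example.

```python
import random
from collections import defaultdict

def make_hyperplanes(num_planes, dim, seed=0):
    """Draw random hyperplanes through the origin, one normal vector each."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)]

def lsh_signature(vector, hyperplanes):
    """One bit per hyperplane: which side of the plane the vector falls on."""
    return tuple(
        1 if sum(v * h for v, h in zip(vector, plane)) >= 0 else 0
        for plane in hyperplanes
    )

def build_buckets(vectors, hyperplanes):
    """Group item IDs by signature so that lookups only scan one bucket."""
    buckets = defaultdict(list)
    for item_id, vec in vectors.items():
        buckets[lsh_signature(vec, hyperplanes)].append(item_id)
    return buckets

vectors = {
    "a": [1.0, 2.0, 3.0],
    "a_scaled": [2.0, 4.0, 6.0],       # same direction as "a"
    "a_opposite": [-1.0, -2.0, -3.0],  # opposite direction
}
planes = make_hyperplanes(num_planes=8, dim=3)
buckets = build_buckets(vectors, planes)

# A query pointing in the same direction as "a" hashes to the same bucket.
candidates = buckets[lsh_signature([0.5, 1.0, 1.5], planes)]
print(candidates)
```

Vectors that point in the same direction always share a signature, while vectors that are merely close share one with high probability. That is why production systems usually build several hash tables and then re-rank the candidates by true distance.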

At this stage, our database has two key indexes:

- Vector Index: Quickly identifies vectors that are conceptually similar.

- Metadata Index: Efficiently filters results based on structured data.
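The metadata side can be pictured as a classic inverted index. The sketch below is an assumption about one simple way to build it (equality filters only, with a hypothetical `MetadataIndex` class); real systems also support ranges, full text, and more.

```python
from collections import defaultdict

class MetadataIndex:
    """Inverted index: (field, value) -> set of item IDs carrying that value."""

    def __init__(self):
        self.postings = defaultdict(set)
        self.all_ids = set()

    def add(self, item_id, metadata):
        # Values must be hashable (strings, numbers, ...) in this toy version.
        self.all_ids.add(item_id)
        for key, value in metadata.items():
            self.postings[(key, value)].add(item_id)

    def match(self, **filters):
        """IDs that satisfy every equality filter (logical AND)."""
        if not filters:
            return set(self.all_ids)
        sets = [self.postings.get((k, v), set()) for k, v in filters.items()]
        return set.intersection(*sets)

idx = MetadataIndex()
idx.add("img-001", {"location": "beach", "year": 2023})
idx.add("img-002", {"location": "beach", "year": 2024})
idx.add("img-003", {"location": "city", "year": 2024})

print(idx.match(location="beach", year=2024))
```

Because each filter resolves to a set of IDs, combining several filters is just a set intersection, with no scan over the vectors themselves.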

Many vector databases are built to handle large volumes of data efficiently. Some systems keep recent or frequently accessed vectors in RAM for faster querying, while offloading the rest to disk to accommodate larger datasets. Others separate the index (often in memory) from the raw vector data (which they keep on disk or SSD).

While implementation details vary, the core idea remains the same: the storage layer durably persists both vectors and metadata, so that they survive crashes and can be retrieved efficiently later.

2. Querying Data: Finding Similar Items

Once our vectors are stored and indexed, here's how we search:

Step 1: Formulating the Query (Data + Context)

If a user uploads a new photo and wants to find similar images from a specific location, their query will consist of:

- Content (the uploaded image itself)

- Metadata filters (like “location = beach”)

Step 2: Embedding the Query

Before searching, the user's image query is transformed using the same embedding model as earlier. This produces a query vector in the same latent vector space as the stored data.

Step 3: Performing Vector Similarity Search

Now, the database uses its vector index to rapidly identify the closest vectors to the query vector. Rather than checking every stored image (which would be slow), it uses advanced algorithms, such as Approximate Nearest Neighbor (ANN) search methods, to quickly explore only the most relevant areas of the vector space.
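Here is a minimal sketch of how "exploring only the most relevant areas" can work, in the style of an IVF index. It assumes the cluster centroids are already known (in practice they are usually learned with k-means), and the class name `IVFIndex` is hypothetical. The `nprobe` parameter name follows a convention used by IVF implementations such as FAISS.

```python
import math

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

class IVFIndex:
    """Toy inverted-file index: each vector is filed under its nearest centroid."""

    def __init__(self, centroids):
        self.centroids = centroids
        self.lists = [[] for _ in centroids]  # one inverted list per cluster

    def _nearest_centroids(self, vector, n):
        order = sorted(
            range(len(self.centroids)),
            key=lambda i: euclidean(vector, self.centroids[i]),
        )
        return order[:n]

    def add(self, item_id, vector):
        cluster = self._nearest_centroids(vector, 1)[0]
        self.lists[cluster].append((item_id, vector))

    def search(self, query, k=3, nprobe=1):
        # Only scan the nprobe clusters closest to the query, not every vector.
        candidates = []
        for cluster in self._nearest_centroids(query, nprobe):
            candidates.extend(self.lists[cluster])
        candidates.sort(key=lambda item: euclidean(query, item[1]))
        return [item_id for item_id, _ in candidates[:k]]

index = IVFIndex(centroids=[[0.0, 0.0], [10.0, 10.0]])
index.add("a", [0.5, 0.2])
index.add("b", [1.0, 1.5])
index.add("c", [9.0, 9.5])
index.add("d", [10.5, 10.0])

results = index.search([0.8, 0.9], k=2, nprobe=1)
print(results)
```

Raising `nprobe` scans more clusters, which improves recall at the cost of more distance computations. This is the speed versus accuracy dial behind the word "approximate".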

Step 4: Applying Metadata Filtering

If the user specified metadata filters (like location), the database uses the metadata index to narrow down results further. Some databases apply metadata filters before searching for similar vectors (pre-filtering), while others filter results afterward (post-filtering). Each approach has trade-offs:

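- Pre-filtering shrinks the search space up front, but an overly strict filter can drop items that would otherwise have been relevant, and heavy filtering adds its own overhead.

- Post-filtering considers every vector during the similarity search, but it has to retrieve extra candidates and discard the non-matching ones afterward, which can slow the query or leave fewer results than requested.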

Many vector databases offer both approaches or a more flexible hybrid solution.
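The difference between the two orderings is easy to see in a toy sketch. The example below uses a brute-force search in place of a real vector index, and the `overfetch` parameter is a hypothetical knob standing in for "retrieve more candidates than requested".

```python
import math

def distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def top_k(query, items, k):
    """Brute-force nearest neighbors; a real database would use its vector index."""
    return sorted(items, key=lambda item: distance(query, item["vector"]))[:k]

def pre_filter_search(query, items, predicate, k):
    # Filter first, then search only among the items that passed.
    allowed = [item for item in items if predicate(item["metadata"])]
    return top_k(query, allowed, k)

def post_filter_search(query, items, predicate, k, overfetch=1):
    # Search first (optionally fetching extra candidates), then filter.
    hits = top_k(query, items, k * overfetch)
    return [item for item in hits if predicate(item["metadata"])][:k]

photos = [
    {"id": "p1", "vector": [0.1, 0.1], "metadata": {"location": "city"}},
    {"id": "p2", "vector": [0.2, 0.1], "metadata": {"location": "city"}},
    {"id": "p3", "vector": [0.9, 0.8], "metadata": {"location": "beach"}},
    {"id": "p4", "vector": [1.0, 1.0], "metadata": {"location": "beach"}},
]

def is_beach(meta):
    return meta["location"] == "beach"

query = [0.0, 0.0]
pre = [p["id"] for p in pre_filter_search(query, photos, is_beach, k=2)]
post = [p["id"] for p in post_filter_search(query, photos, is_beach, k=2)]
post_wide = [p["id"] for p in post_filter_search(query, photos, is_beach, k=2, overfetch=2)]
print(pre, post, post_wide)
```

With this data, plain post-filtering returns nothing because the two nearest photos are both city shots, while fetching twice as many candidates recovers the beach photos. Hybrid strategies aim for the same outcome without paying for a much larger candidate set.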

Step 5: Result Retrieval

Finally, the database returns the items identified as most similar, respecting any filters applied. In our photo search example, users instantly see images that visually resemble their query image and match the requested criteria (like photos taken at the beach or at a specific beach).

Vector Database Use Cases

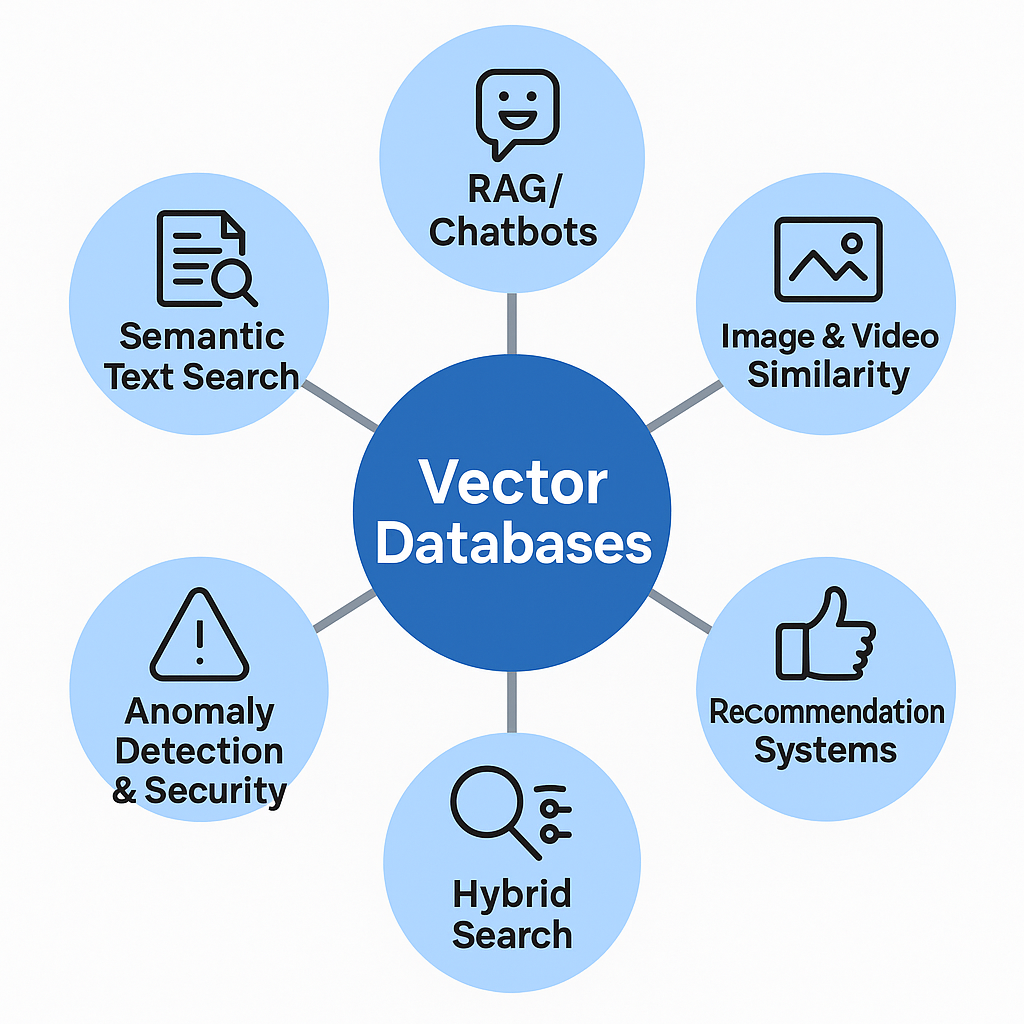

Vector databases unlock a wide range of applications that go far beyond what traditional keyword or relational databases can handle. Anywhere you need to search by meaning or similarity, rather than exact matches, vectors shine.

Here are some of their most impactful use cases (many of these are already in production in AI-driven companies):

- Semantic Text Search: Instead of matching keywords, embeddings enable finding text that means the same thing. For example, searching a support ticket database for “reset password issue” could retrieve articles that don’t contain the exact words “reset password” but discuss the concept (e.g. “unable to log in after forgetting passcode”). This is far more powerful for natural language queries and is used in semantic search engines and enterprise document search.

- Question-Answering and Chatbots (Retrieval-Augmented Generation): Many LLM-based applications use a vector database as a knowledge base. All reference documents are embedded and stored; when the LLM needs to answer a question, the application embeds the question, and the database finds the most relevant chunks of text to pass to the LLM to generate a response. This architecture, known as retrieval-augmented generation (RAG), powers many modern AI assistants and chatbots.

- Image and Video Similarity Search: As described above in our example, vector databases enable searching for images by image content. This is used by companies for deduplication, content moderation, copyright detection, or providing visual recommendations. Likewise for video or audio: embeddings can enable searches like “find me songs that sound like this audio clip” or identify video scenes similar to the sample provided.

- Recommendation Systems: Instead of manually defined features, many recommender systems generate an embedding for users and an embedding for items (based on viewing history, preferences, item attributes, etc.), and then use a vector search to find the closest items to a user’s embedding. A vector database can serve as the engine that, given a user’s profile vector, returns the top N most similar product vectors, which, on the user’s end, become personalized suggestions.

- Hybrid Searches (Semantic + Filters): As we’ve covered, one of the biggest strengths of vector databases is hybrid searching—combining semantic relevance with structured filtering. For example, a job portal might let you search for candidates similar to a given ideal profile (using vector similarity on resumes), but you might also want to filter by location or required credentials. The combination of vector search with structured filtering (via metadata) makes this possible.

- Anomaly Detection & Security: In cybersecurity or fraud detection, unusual behaviors can be identified as outliers in the vector space. By embedding sequences of actions or log events, vectors that fall far from any known cluster can be flagged as potentially anomalous. While not a replacement for dedicated anomaly detection systems, a vector-based approach can capture complex patterns in behavioral data embeddings.

These are just a few examples. Essentially, any application that involves searching by similarity or semantic content can benefit from a vector database.
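As one illustration, the retrieval half of the RAG flow described above can be sketched in a few lines. Everything here is a simplification: `embed` is a toy bag-of-words stand-in for a real embedding model (it only matches shared words, so it is not truly semantic), the chunks are invented, and the final call to an LLM is left out.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def retrieve(question, chunks, k=2):
    """Return the k chunks whose vectors are closest to the question's vector."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]

def build_prompt(question, chunks, k=2):
    """Assemble the retrieved context and the question into one prompt string."""
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

chunks = [
    "To reset your password, open Settings and choose Security.",
    "Invoices are emailed on the first day of each month.",
    "Password resets require access to your registered email.",
]
question = "How do I reset my password?"
print(build_prompt(question, chunks))
```

In a real system the chunks would be embedded once at ingestion time and fetched through the vector index, rather than re-embedded on every query as this sketch does.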

Popular Vector Database Solutions and Examples

The growing importance of vector databases has created a vibrant ecosystem of solutions, ranging from open-source projects to fully managed cloud services. Below are some popular choices, each with its unique strengths and ideal use cases.

Open-Source Solutions

Weaviate

Weaviate is an open-source, AI-native vector database known for combining vector similarity search with structured data filtering. Built in Go, it offers a user-friendly experience and modular plugins, simplifying the integration of embedding models.

- Advantages:

  - Built-in modular support for various embedding models

  - Easy setup and intuitive hybrid querying (semantic + keyword search)

  - Robust community support and documentation

- Best for: Developers looking for flexibility, simplicity, and powerful hybrid search capabilities in a user-friendly package.

Qdrant

Qdrant is a high-performance, Rust-based open-source vector search engine designed for reliability and speed. It’s efficient enough to operate on minimal resources, making it ideal for both cloud deployments and edge applications.

- Advantages:

  - High-speed searches powered by HNSW indexing

  - Lightweight and resource-efficient (ideal for edge devices)

  - Simple and clear API with broad language support

- Best for: Teams prioritizing speed and efficiency, especially those working in resource-constrained environments.

Milvus

Milvus is a distributed, highly scalable vector database aimed at enterprise-grade AI applications. Backed by Zilliz, Milvus supports billions of vectors and integrates easily with common AI frameworks like PyTorch and TensorFlow.

- Advantages:

  - Exceptional scalability and distributed architecture

  - Supports multiple indexing methods (HNSW, IVF, PQ, etc.)

  - Enterprise-grade reliability and integrations

- Best for: Enterprises handling large-scale AI workloads and complex queries requiring significant scalability.

LanceDB

LanceDB is a lightweight, embedded vector database built specifically for handling multimodal AI data. Utilizing Apache Arrow, it allows efficient querying of large-scale embeddings directly within applications or notebooks.

- Advantages:

  - Embedded and lightweight (no separate servers required)

  - Efficient multimodal data handling

  - Excellent integration with Python and JavaScript apps

- Best for: Developers who prefer embedding vector search capabilities directly into their applications or research environments.

Postgres (pgvector)

Pgvector brings vector search capabilities to PostgreSQL, allowing you to integrate similarity search directly into your existing relational databases. Ideal for moderate datasets, it lets you avoid introducing a new database into your stack.

- Advantages:

  - Leverages PostgreSQL’s relational capabilities and robustness

  - Easy integration into existing SQL workflows

  - Suitable for moderate-scale vector searches

- Best for: Projects already using PostgreSQL where simplicity and integration outweigh specialized vector search performance.

Fully Managed Cloud Solutions

Pinecone

Pinecone was among the earliest fully managed vector database services, offering scalable vector search via a simple cloud API. Available on platforms like AWS and GCP, Pinecone abstracts away infrastructure management, making vector search accessible and easy to scale.

- Advantages:

  - Fully managed (no infrastructure maintenance required)

  - Simple API and seamless scalability

  - Proven reliability for production-grade applications

- Best for: Teams wanting hassle-free deployments, reliable performance, and easy scalability without infrastructure headaches.

Redis Vector Store

Redis’s vector capabilities, part of Redis Stack, leverage its renowned in-memory performance to deliver ultra-fast vector searches. Ideal for real-time applications where latency matters most.

- Advantages:

  - Lightning-fast, in-memory vector searches

  - Real-time search performance

  - Integration with Redis’s rich ecosystem

- Best for: Real-time systems like recommendation engines, gaming matchmaking, and any use case demanding ultra-low latency.

Vespa

Vespa is a mature, open-source search platform capable of blending semantic vector searches with traditional keyword queries seamlessly. Originally developed by Yahoo, Vespa supports large-scale, distributed search operations.

- Advantages:

  - Supports combined semantic and keyword search seamlessly

  - Highly scalable and distributed architecture

  - Robust, production-tested solution

- Best for: Organizations needing to perform complex searches at scale, combining vector and traditional text searches in one platform.

Other Notable Mentions

- Elasticsearch/OpenSearch: Popular text search engines now incorporating native vector search.

- Microsoft Azure: Azure's native vector search options available within Cosmos DB.

- MySQL Vector Search: Plugins and managed services (like PlanetScale) providing basic vector similarity features for MySQL.

- FAISS: An influential, widely used library for vector indexing often integrated into other vector database systems.

Integrations and Ecosystem Compatibility

Most vector databases offer straightforward integration through SQL-like queries or simple get/put-style APIs, typically via Python client libraries. This makes it easy to plug them into existing machine learning pipelines without needing to rewrite the codebase. In many cases, switching between vector databases is more about performance or cost tuning than rearchitecting your system.

In addition, frameworks like cognee, LangChain, and LlamaIndex offer abstraction layers on top of vector stores, allowing you to easily swap between backends as your infrastructure needs evolve.
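The idea behind such an abstraction layer can be sketched with a small interface. The `VectorStore` protocol and its `upsert`/`query` methods below are hypothetical names invented for this example; they are not the actual API of cognee, LangChain, or LlamaIndex.

```python
from typing import Protocol

class VectorStore(Protocol):
    """Hypothetical minimal interface that any backend adapter would implement."""

    def upsert(self, item_id: str, vector: list[float]) -> None: ...
    def query(self, vector: list[float], k: int) -> list[str]: ...

class InMemoryStore:
    """One possible backend: brute-force search over a dictionary."""

    def __init__(self):
        self._vectors = {}

    def upsert(self, item_id, vector):
        self._vectors[item_id] = vector

    def query(self, vector, k):
        def sq_dist(other):
            return sum((x - y) ** 2 for x, y in zip(vector, other))
        return sorted(self._vectors, key=lambda i: sq_dist(self._vectors[i]))[:k]

def index_and_search(store: VectorStore, items, query, k=1):
    """Application code depends only on the interface, not on a concrete backend."""
    for item_id, vec in items.items():
        store.upsert(item_id, vec)
    return store.query(query, k)

result = index_and_search(
    InMemoryStore(),
    {"a": [0.0, 0.0], "b": [5.0, 5.0]},
    [4.0, 4.5],
)
print(result)
```

Swapping backends then means writing another class with the same two methods, while `index_and_search` and the rest of the application stay untouched.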

From Keywords to Meaning: Making Data Speak Your Language

Vector databases have marked a significant leap forward in how we handle data in the AI era. By encoding information into numerical vectors, these databases allow machines to understand and retrieve data based on meaning and similarity, rather than merely matching keywords.

From powering semantic searches on enterprise documents to recommending visually similar products, vector databases quietly enable the more intuitive, intelligent experiences that users have come to expect. They’re becoming critical infrastructure in recommendation systems, chatbots, anomaly detection, and anywhere that understanding context and meaning matters.

Of course, vector databases aren’t a one-size-fits-all solution. They introduce complexities—like computing embeddings and managing specialized infrastructure. However, as AI applications grow and unstructured data multiplies, their benefits increasingly outweigh these costs. With open-source projects flourishing and cloud providers everywhere integrating vector search into their databases and services, it’s clear that this technology is here to stay.

Here at cognee, we’ve been developing a platform designed to streamline the integration of vector databases with knowledge graphs, enabling your AI to better understand your data and deliver smarter results. Want to see how cognee could simplify and supercharge your data workflows?

Not quite ready for a demo? Check out our deep dive on how cognee works under the hood to see how we combine vector search with knowledge graphs to make your data smarter, more connected, and easier to work with.
