Google ADK × cognee: Agents That Remember What Matters

🧠 TL;DR: Plug cognee into Google ADK as a long-running tool and your Gemini agents gain a shared, persistent graph + vector memory that survives across sessions. Store contract- and workflow-related facts once and have agents retrieve them via natural language queries, skipping hand-built state hacks in favor of just two tools that sit naturally in the stack. This plugs directly into ADK’s emerging context-aware agent framework, where long-lived memory is a first-class part of the system, not an afterthought.

Over the last year, the agent ecosystem around Google’s Agent Development Kit (ADK) has moved from “toy assistants” to production multi-agent systems: orchestration workflows, long-running jobs, dispatchers coordinating sub-agents, and agents that sit alongside existing enterprise systems.

However, as Google’s own architecture notes put it, the longer agents run and the more they collaborate, context management—not model size—becomes the bottleneck. For example, the agent will do all the right things in one run: call tools, pull fresh data, piece it together, land on a good decision… and then forget all of it once the session ends.

When you spin it up again, it’s back to zero: no record of contracts it saw last Monday, no shared facts between a “sales assistant” and a “research analyst”, no way to pick up a thread that started days ago. That might be tolerable for demo bots, but it has real repercussions when you’re wiring agents into weeks-long workflows that involve many users and teams.

Dumping everything into a giant prompt is not the way to go—it drives up token costs, slows responses, and still drops important pieces on the floor. So, ADK’s answer is a context stack that separates durable state (sessions, memory, artifacts) from the per-call “working context” that actually goes into the LLM.

The question is—what does that durable memory look like in practice, and how do you make it feel like a first-class part of the ADK tool stack rather than a side project with its own database and glue code?

That’s exactly what the ADK × cognee integration is meant to answer, by providing your agents with a shared, persistent memory layer they can access the same way they call any other tool.

How cognee Turns Memory into an ADK Tool

cognee provides a structured memory layer that pairs graphs with vectors and persists beyond any single agent run. Here’s what it brings to an ADK setup:

- Native integration: cognee works exactly like any other

LongRunningFunctionTool, aligning with ADK’s event model instead of bolting on a separate service pattern. - Natural language search: Agents ask questions in plain English and get back structured results; you don’t have to hand-code retrieval logic for each use case.

- Graph + vector synergy: cognee automatically extracts entities, relationships, and structure from raw text, and embeds data as vectors, so searches can retrieve semantically and factually connected information.

- Session isolation: Multi-tenant support with clean data boundaries per user or organization.

- Cross-session persistence: Memory that outlives agent instances, conversation threads, and application restarts.

This aligns perfectly with how ADK wants you to architect agents:

- ADK treats a tool as any capability that lets an agent interact with the world—a function, an external API, a database, or another agent.

- ADK’s function tools and long-running tools are explicitly designed for integrating custom backends—including proprietary data stores and complex pipelines.

cognee fits this “tool-first” ethos by offering a single logical memory backend—a graph + embeddings + retrieval engine—that you expose to agents with just two tools. That keeps your agent configs clean: memory is “just tools,” not a parallel infrastructure track you have to manage separately.

On cognee’s side, the integration reuses the same three core primitives:

.addto ingest content,.cognifyto turn it into a knowledge graph with embeddings, and.searchto run semantic queries that combine vector similarity with graph traversal.

The ADK integration wraps those into concurrent-safe tools designed to run alongside the rest of your agent stack.

Why Graph + Vector Memory Fits ADK So Well

Plain vector stores are good at “find text like this text”, but they have a hard time expressing structure—contracts tied to companies, industries, values, and terms; projects linked to owners and dates.

cognee layers graphs on top of vectors so that ADK agents can:

- Answer fuzzy questions with vector search, and

- Follow relationships when the query is about structure, not just wording.

Because the integration is built as long-running tools, agents call this memory layer the same way they call any other function or API.

How ADK Agents Store and Retrieve with cognee

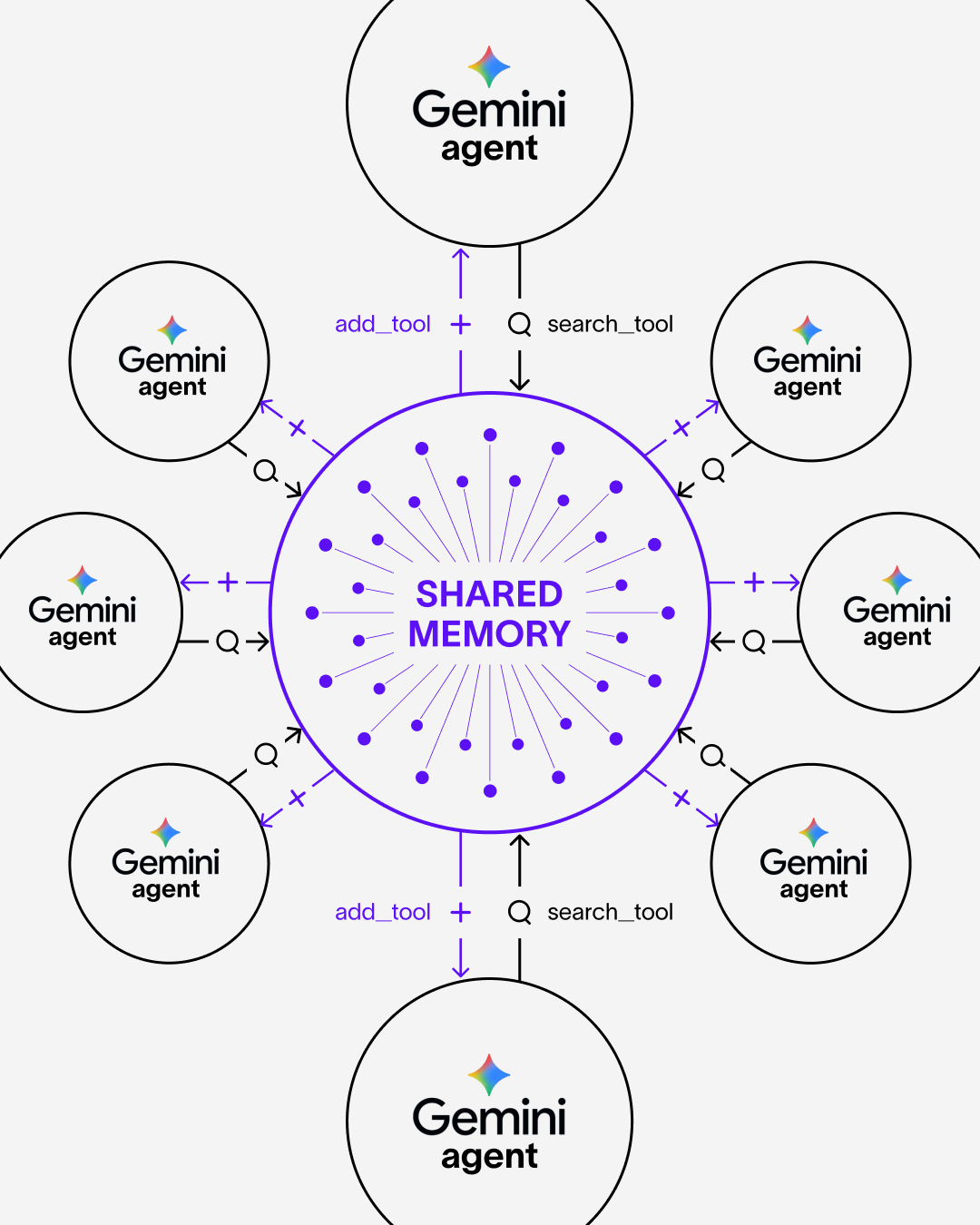

From the agent’s POV, cognee shows up as two tools:

add_tool: Ingests information into cognee's memory layersearch_tool: Queries stored knowledge using natural language

Conceptually, the loop looks like this:

Storing

When an agent calls add_tool with raw text—for instance, a contract summary—cognee:

- Extracts entities (companies, dates, industries, values).

- Establishes relationships between them (e.g. “company X –[CONTRACT]→ value / sector / term”).

- Generates embeddings to support fuzzy text queries.

- Indexes everything into a persistent store.

Retrieving

When the agent later calls search_tool with a question such as:

“What healthcare contracts do we have?”

cognee combines:

- Vector similarity (which text mentions “healthcare contracts”)

- Graph traversal (which contracts are linked to the healthcare sector)

and returns structured context, for example:

“HealthBridge Systems: £2.4M, Feb 2023–Jan 2026, healthcare sector.”

That means the memory an agent sees is not “top-k nearest paragraphs,” but a structured slice of the knowledge graph it can reason over and include in its response.

Quick Start: Your First ADK Agent with Persistent Memory

Here’s a minimal example of an ADK agent using cognee as its memory layer:

That's it. The agent now has persistent memory, which lives in cognee, not in the runner or process. You can shut down the app, start a new instance, and keep querying the same knowledge.

No database setup, no embedding configuration, no retrieval pipelines to maintain.

Real-World Demo: Memory That Outlasts Restarts

Persistence matters most when you have different agents or processes looking at the same data ecosystem.

For example, a sales assistant agent logs a contract on Monday, and a research analyst agent pulls it up on Wednesday—from a completely fresh agent instance with no shared state or conversation history:

The link between these agents is cognee’s graph. This is true persistent memory—knowledge that outlives any single agent instance.

From a production perspective, this pattern is what many enterprise RAG + KG architectures aim for: agents that can answer questions like “What healthcare contracts did we sign with UK providers in the last fiscal year?” by traversing graph-structured knowledge rather than trawling raw documents.

Session Isolation: Multi-Tenant Memory

Real systems need agents that can partition memory for a specific person or tenant without leaking data across accounts.

cognee’s sessionized tools handle that by giving each session its own graph slice (can be extended for session caching for short-term memory or direct access management):

Session IDs can stand in for user IDs, tenants, or orgs, and isolation is enforced through cognee's NodeSet mechanism. Each session gets its own cluster in the knowledge graph, enabling easy separation from others without sacrificing the benefits of a shared backend.

How Session IDs Work

Session object tracks the interaction history, while the session_id you pass into cognee defines which graph partition the agent is allowed to see.

Visualizing the Memory Layer

The graph that backs your agents isn’t a black box. You can inspect it directly to understand what your system has actually stored:

Open the resulting HTML and you’ll see:

- Entities: Companies, industries, dates, monetary values

- Relationships: Which company belongs to which industry, contract timelines

- Session Clusters: Data grouped by session ID, showing clean isolation

This is useful both for debugging (“did the agent store what I think it stored?”) and for explaining behavior to stakeholders.

On the operational side, cognee’s tools are built to handle ADK’s parallel calls. Adds are queued and batched with appropriate locking, so you can run multiple long-running agents without racing to rebuild indexes or corrupting state.

Technical Deep Dive: Async Processing & Concurrency

Google ADK agents can make concurrent tool calls. The integration handles this through async queuing:

Why This Matters

cognee's data pipeline has two stages:

add(): Imports data, doesn't rebuild the knowledge graph yetcognify(): Processes everything and rebuilds the graph

The queue system batches multiple add() calls, waits for a quiet period (2 seconds), then runs cognify() once. This prevents:

- Race conditions during concurrent tool calls

- Redundant graph rebuilds

- Wasted compute on incremental updates

By handling batching and locking inside the integration, you ensure the ADK runtime only sees a clean, high-level semantic contract:

“add_tool eventually makes this piece of information available in the knowledge graph.”

…and the details of graph builds happen behind the scenes, but still within ADK’s event-driven, observable execution model.

LongRunningFunctionTool Integration

The tools use Google ADK's LongRunningFunctionTool wrapper for async operations:

This ensures proper handling of async operations within ADK's execution model. Because ADK treats tools as first-class, you can also compose cognee with other tools.

Pre-Loading Data in the Background

You can also pre-ingest data into cognee for onboarding agents to existing knowledge bases or batch processing of documents, then keep interactions fast by letting the agent pull only the relevant slice when needed.

Here’s how this works:

Patterns for Using Shared Memory in Production

Once you have a shared, persistent memory layer wired into ADK, the vast array of game-changing use cases become obvious. Here are some below.

Knowledge Accumulation

Agents can log insights from emails, meetings, calls, or logs into cognee, then query across the entire history later—like tracing every Phoenix project interaction across days.

This pattern mirrors use cases, where scattered data sources such as reports, emails, and system logs are turned into a unified knowledge graph that supports semantic search and insight discovery.

Seamless Handovers Between Agents

One session sets context (“We were debugging auth issues for Guardian Insurance yesterday”), another agent picks up that same context later without having to replay transcripts or carry around custom state files.

Here, the “thing you were working on” is not just a string in conversation history; it’s a graph node connected to related files, tickets, and design decisions if you also feed those into cognee.

Team Play Across Specialized Agents

Collector agents focus on pulling data in, analyst agents focus on querying it. You can scale this to squads: feedback trackers, compliance checkers, strategy agents—all reading from and writing to the same graph-backed memory.

This pattern is increasingly common in agentic RAG architectures, where one set of agents focuses on exploration and ingestion, and another on reasoning and explanation.

Because ADK is multi-agent-native, you can scale this into full team-of-agents setups: a collector that tracks product feedback, a compliance reviewer that flags risky content, and a strategy agent that asks high-level questions—all sharing the same cognee-backed memory layer.

Getting cognee Memory Running in ADK

- Installation:

- Environment Setup:

- Basic Usage:

If you already have an ADK agent running, adding persistent memory is often as simple as:

- Installing the package and setting keys.

- Adding

add_toolandsearch_toolto your agent definition. - Choosing what the agent should store and when to call

search_tool.

Advanced Memory: Time, Cleanup, and Feedback

Once the basics are in place, cognee exposes more controls for shaping your agent’s memory:

-

Enable time-aware links during

cognifyso questions like “What expired last month?” or “What contracts end next quarter?” can be answered by following date relationships, not just scanning text. -

Use Memify to refine graphs after ingest: tighten up entities, elevate key facts, and trim noise without tearing everything down and rebuilding from scratch.

-

Feed user or system ratings back into the graph to nudge which edges and facts are preferred, so frequently useful information surfaces more readily over time.

You still interact with these through the same memory layer—the difference is that you now get to steer how it evolves as your agents and workloads grow.

Leaving Demo Bots in the Dust: Memory Makes Agents Real

Agents that forget every run are good for quick experiments. Agents with a shared, persistent memory layer, on the other hand, start to look like real systems: they accumulate knowledge, survive restarts, hand work off between each other, and give users consistent answers based on everything that has happened so far.

Building agents that remember isn't just a feature—it's the foundation for AI that truly understands your context. The Google ADK × cognee integration makes that foundation trivially easy to build on.

Context Graphs: Why Agent Memory Needs World Models and Behavioral Validation

Claude Agent SDK × cognee: Persistent Memory via MCP (Without Prompt Bloat)